Understanding, or inferring, data beyond what has been observed is an age-old problem in statistics. Part of that problem is measuring the “goodness” of a system whose task is to predict unseen data. We call this measure the error (or rather, the “badness”). The error has two underlying components, bias and variance, also known as the structural error and the estimation error.

Structural Error - This error is incurred when the true relationship between the data points has a different structure from that of the fitted function; for example, when the data is non-linear but the fitted function is linear.

Estimation Error - This error comes from fitting the model to a finite, noisy training sample: the fitted model differs from the best model of the same structure, which is what we would obtain with unlimited data. It is largest when the training set is very small and decreases as the number of training examples grows, but some estimation error is always incurred with finite data.
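To make the two errors concrete, here is a small Monte Carlo sketch. It assumes a hypothetical setup that is not from the text: the true function is $\sin(2\pi x)$ on $[0, 1]$ with Gaussian noise, and the fitted models are least-squares polynomials of degree 1 (a structural mismatch) and degree 5. The squared bias of the linear fit should stay roughly constant as the training set grows, while the variance of each fit shrinks.

```python
import numpy as np

def bias_variance(degree, n_train, n_repeats=500, noise=0.3, seed=0):
    """Monte Carlo squared bias and variance of a least-squares polynomial fit.

    Hypothetical setup: y = sin(2*pi*x) + Gaussian noise, with x uniform on [0, 1].
    """
    rng = np.random.default_rng(seed)
    x_test = np.linspace(0.0, 1.0, 50)
    f_test = np.sin(2 * np.pi * x_test)              # true function on a test grid
    preds = np.empty((n_repeats, x_test.size))
    for rep in range(n_repeats):                     # draw many independent training sets
        x = rng.uniform(0.0, 1.0, n_train)
        y = np.sin(2 * np.pi * x) + noise * rng.standard_normal(n_train)
        coeffs = np.polyfit(x, y, degree)
        preds[rep] = np.polyval(coeffs, x_test)
    mean_pred = preds.mean(axis=0)                   # average fitted function
    bias_sq = np.mean((mean_pred - f_test) ** 2)     # structural error
    variance = np.mean(preds.var(axis=0))            # estimation error
    return bias_sq, variance

for degree in (1, 5):
    for n_train in (20, 500):
        b, v = bias_variance(degree, n_train)
        print(f"degree={degree}, n={n_train}: bias^2={b:.4f}, variance={v:.4f}")
```

Adding training data only attacks the second term; the linear model’s structural error persists no matter how many examples it sees.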

Estimators

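In Fisher (1922), Fisher introduced the method of maximum likelihood estimation (MLE), which can be understood through a toy problem: given a single observation $x \sim \mathcal{N}(\mu, \sigma^2 I_d)$ of a $d$-dimensional Gaussian with known variance $\sigma^2$, estimate the unknown mean $\mu$. Maximizing the likelihood gives

$$\hat{\mu}_{\text{MLE}} = \arg\max_{\mu}\, p(x \mid \mu) = x$$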

The MLE therefore proposes that the best estimate of the mean is the sample itself. This holds in one and two dimensions, but for Gaussians in three or more dimensions it fails: the MLE can be beaten, and by a wide margin as the dimension grows (the notion of “good” is made precise below).

A good estimator can be defined as one that has a small expected squared L2 distance, $\mathbb{E}\lVert \hat{\mu} - \mu \rVert_2^2$, between the true mean and the estimated mean.

So far this is a single toy problem. Now extend it to $d$ independent problems: for each $i = 1, \dots, d$ we observe one sample $x_i \sim \mathcal{N}(\mu_i, \sigma^2)$. To estimate every $\mu_i$ using only its noisy sample $x_i$, the trivial estimator is $\hat{\mu}_i = x_i$, i.e. $\hat{\mu} = x$.

The “goodness” of this estimator can be measured by its expected loss, or risk:

$$R(\hat{\mu}, \mu) = \mathbb{E}\lVert \hat{\mu}(x) - \mu \rVert_2^2 = \sum_{i=1}^{d} \mathbb{E}(x_i - \mu_i)^2 = d\sigma^2$$

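This trivial estimator is suboptimal, or inadmissible, whenever $d \ge 3$: there exists an estimator $\tilde{\mu}$ such that $R(\tilde{\mu}, \mu) \le R(\hat{\mu}, \mu)$ for every $\mu$, with strict inequality for at least one $\mu$.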

Implicit Bias and Variance

Here we show that bias and variance are implicit in the definition of an error that captures both structural and estimation error. To see this, start from the expected squared error of an estimator $\hat{\mu}$ of $\mu$:

$$\mathbb{E}\lVert \hat{\mu} - \mu \rVert_2^2$$

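Introducing the mean $\bar{\mu} = \mathbb{E}[\hat{\mu}]$ by adding and subtracting it inside the norm:

$$\mathbb{E}\lVert \hat{\mu} - \mu \rVert_2^2 = \mathbb{E}\lVert \hat{\mu} - \bar{\mu} \rVert_2^2 + 2\,\mathbb{E}[\hat{\mu} - \bar{\mu}]^\top (\bar{\mu} - \mu) + \lVert \bar{\mu} - \mu \rVert_2^2$$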

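Since $\mathbb{E}[\hat{\mu} - \bar{\mu}] = 0$, the cross term vanishes, and the last equation becomes:

$$\mathbb{E}\lVert \hat{\mu} - \mu \rVert_2^2 = \underbrace{\mathbb{E}\lVert \hat{\mu} - \bar{\mu} \rVert_2^2}_{\text{variance}} + \underbrace{\lVert \bar{\mu} - \mu \rVert_2^2}_{\text{bias}^2}$$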

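where $\lVert \cdot \rVert_2$ is the L2 norm. This is the mathematics behind the implicit presence of bias and variance in the loss function (here, the squared L2 norm). Note also that the bias enters squared while the variance enters linearly: near zero bias, the derivative of the error with respect to the size of the bias, $2\lVert \mathbb{E}[\hat{\mu}] - \mu \rVert_2$, is close to zero, so adding a little bias costs very little, whereas every unit of variance removed lowers the error one-for-one.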
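The decomposition can be checked numerically. The sketch below assumes a hypothetical scaled estimator $c\,x$ with $x \sim \mathcal{N}(\mu, \sigma^2 I_d)$, which has bias $(c-1)\mu$ and variance $c^2 d\sigma^2$, so its error is $c^2 d\sigma^2 + (1-c)^2\lVert\mu\rVert_2^2$. Shrinking slightly ($c < 1$) adds a small bias but removes more variance.

```python
import numpy as np

def mse_decomposition(c, mu, sigma=1.0, n_samples=200_000, seed=0):
    """Monte Carlo MSE, variance and squared bias of the estimator c * x, x ~ N(mu, sigma^2 I)."""
    rng = np.random.default_rng(seed)
    x = mu + sigma * rng.standard_normal((n_samples, mu.size))
    est = c * x
    mean_est = est.mean(axis=0)                                # empirical E[mu_hat]
    mse = np.mean(np.sum((est - mu) ** 2, axis=1))             # E||mu_hat - mu||^2
    variance = np.mean(np.sum((est - mean_est) ** 2, axis=1))  # E||mu_hat - E[mu_hat]||^2
    bias_sq = np.sum((mean_est - mu) ** 2)                     # ||E[mu_hat] - mu||^2
    return mse, variance, bias_sq

mu = np.ones(10)
for c in (1.0, 0.9):
    mse, var, bias_sq = mse_decomposition(c, mu)
    print(f"c={c}: mse={mse:.3f}, variance={var:.3f}, bias^2={bias_sq:.3f}")
```

With $d = 10$, $\sigma = 1$ and $\mu$ the all-ones vector, $c = 1$ gives an error near $10$, while $c = 0.9$ gives roughly $0.81 \cdot 10 + 0.01 \cdot 10 = 8.2$.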

A loss such as the squared L2 norm, which sums the losses of the individual coordinate estimates, therefore induces a bias-variance tradeoff. Under this loss, the trivial estimator $\hat{\mu} = x$ is inadmissible for $d \ge 3$: the shrinkage factor introduced by James and Stein decreases the variance by introducing a bit of bias, thereby reducing the total squared L2 error.

Stein's paradox

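Stein’s estimator, in the James-Stein form given below, exploits the then-unappreciated fact that bias can be traded for variance to reduce the total error:

$$\hat{\mu}_{\text{JS}} = \left(1 - \frac{(d-2)\,\sigma^2}{\lVert x \rVert_2^2}\right) x$$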

Instead of the usual $\hat{\mu} = x$, the estimate is multiplied by a “shrinkage factor”, $1 - (d-2)\sigma^2/\lVert x \rVert_2^2$, which pulls the estimate toward the origin.[1]
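A quick Monte Carlo comparison illustrates the paradox. The sketch assumes $d = 10$, $\sigma = 1$ and two hypothetical true means (the origin and a point far from it). The risk of the MLE should come out near $d\sigma^2 = 10$ in both cases, while the James-Stein risk is lower in both, most of all near the origin, where theory gives $d\sigma^2 - (d-2)^2\sigma^4\,\mathbb{E}[1/\lVert x\rVert_2^2] = 10 - 64/8 = 2$.

```python
import numpy as np

def james_stein(x, sigma=1.0):
    """James-Stein estimate for each row x ~ N(mu, sigma^2 I_d), d >= 3."""
    d = x.shape[-1]
    shrink = 1.0 - (d - 2) * sigma**2 / np.sum(x**2, axis=-1, keepdims=True)
    return shrink * x

def risks(mu, sigma=1.0, n_trials=100_000, seed=0):
    """Monte Carlo risk E||estimate - mu||^2 of the MLE (x itself) and of James-Stein."""
    rng = np.random.default_rng(seed)
    x = mu + sigma * rng.standard_normal((n_trials, mu.size))
    risk_mle = np.mean(np.sum((x - mu) ** 2, axis=1))
    risk_js = np.mean(np.sum((james_stein(x, sigma) - mu) ** 2, axis=1))
    return risk_mle, risk_js

for mu in (np.zeros(10), np.full(10, 5.0)):
    risk_mle, risk_js = risks(mu)
    print(f"||mu||^2={mu @ mu:.0f}: MLE risk={risk_mle:.2f}, James-Stein risk={risk_js:.2f}")
```

Far from the origin the shrinkage factor is close to 1, so the gain is small but still positive.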

Stein's influence in RL

Policy gradients

Recall that in stochastic reinforcement learning, the goal is to learn a policy distribution $\pi_\theta(a_t \mid s_t)$ that maps states to actions. I will assume that you, the reader, know a bit about RL; if you don’t, any deep RL book will give you that background soon enough. In policy gradients, we train a policy such as a deep neural network with weights $\theta$, and look for the weights that maximize the objective function $J(\theta)$, the expected total reward of a rollout:

$$J(\theta) = \mathbb{E}_{\tau \sim \pi_\theta(\tau)}\left[r(\tau)\right]$$

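where each rollout $\tau = (s_1, a_1, \dots, s_T, a_T)$ is the trajectory of state-action pairs seen during a rollout of $T$ time steps, and the probability of a trajectory is defined as:

$$\pi_\theta(\tau) = p(s_1)\prod_{t=1}^{T} \pi_\theta(a_t \mid s_t)\, p(s_{t+1} \mid s_t, a_t)$$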

and the total reward accumulated during the rollout $\tau$ is:

$$r(\tau) = \sum_{t=1}^{T} r(s_t, a_t)$$

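The policy gradient approach is to take the gradient of this objective directly:

$$\nabla_\theta J(\theta) = \int \nabla_\theta \pi_\theta(\tau)\, r(\tau)\, d\tau = \mathbb{E}_{\tau \sim \pi_\theta(\tau)}\left[\nabla_\theta \log \pi_\theta(\tau)\, r(\tau)\right]$$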

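In practice, the expectation over trajectories can be approximated from a batch of $N$ sampled trajectories:

$$\nabla_\theta J(\theta) \approx \frac{1}{N}\sum_{i=1}^{N} \nabla_\theta \log \pi_\theta(\tau_i)\, r(\tau_i) = \frac{1}{N}\sum_{i=1}^{N}\left(\sum_{t=1}^{T} \nabla_\theta \log \pi_\theta(a_{it} \mid s_{it})\right)\left(\sum_{t=1}^{T} r(s_{it}, a_{it})\right)$$

where the second form follows because $p(s_1)$ and the dynamics $p(s_{t+1} \mid s_t, a_t)$ do not depend on $\theta$.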
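The batch estimator can be sketched in a few lines of NumPy. The setup is a hypothetical toy, not from the text: a stateless softmax policy over three actions with fixed per-action rewards, run for $T = 3$ steps. For a softmax policy, $\nabla_\theta \log \pi_\theta(a) = e_a - \pi_\theta$ (with $e_a$ the one-hot vector for $a$), so the exact gradient, $T\,\pi_\theta \odot (r - \pi_\theta^\top r)$, is available to compare against.

```python
import numpy as np

def policy_gradient_estimate(scores, rewards):
    """(1/N) sum_i (sum_t grad log pi(a_it|s_it)) * (sum_t r(s_it, a_it)).

    scores:  (N, T, P) per-step gradients of log pi w.r.t. the P parameters
    rewards: (N, T) per-step rewards
    """
    total_score = scores.sum(axis=1)                    # (N, P)
    total_reward = rewards.sum(axis=1, keepdims=True)   # (N, 1)
    return (total_score * total_reward).mean(axis=0)

# Hypothetical toy problem: stateless softmax policy, 3 actions, T = 3 steps.
rng = np.random.default_rng(0)
theta = np.array([0.0, 0.5, -0.5])      # policy logits (the parameters)
r = np.array([1.0, 2.0, 0.0])           # reward for each action
pi = np.exp(theta) / np.exp(theta).sum()
N, T = 200_000, 3

actions = rng.choice(3, size=(N, T), p=pi)
scores = np.eye(3)[actions] - pi        # grad log pi(a) = one_hot(a) - pi
rewards = r[actions]

estimate = policy_gradient_estimate(scores, rewards)
exact = T * pi * (r - pi @ r)           # analytic gradient of T * sum_a pi(a) r(a)
print(estimate, exact)
```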

Here the policy $\pi_\theta(a_t \mid s_t)$ is a probability distribution over the action space, conditioned on the state. In the agent-environment loop, the agent samples an action $a_t \sim \pi_\theta(\cdot \mid s_t)$ and the environment responds with a reward $r(s_t, a_t)$.

What is wrong with PG?

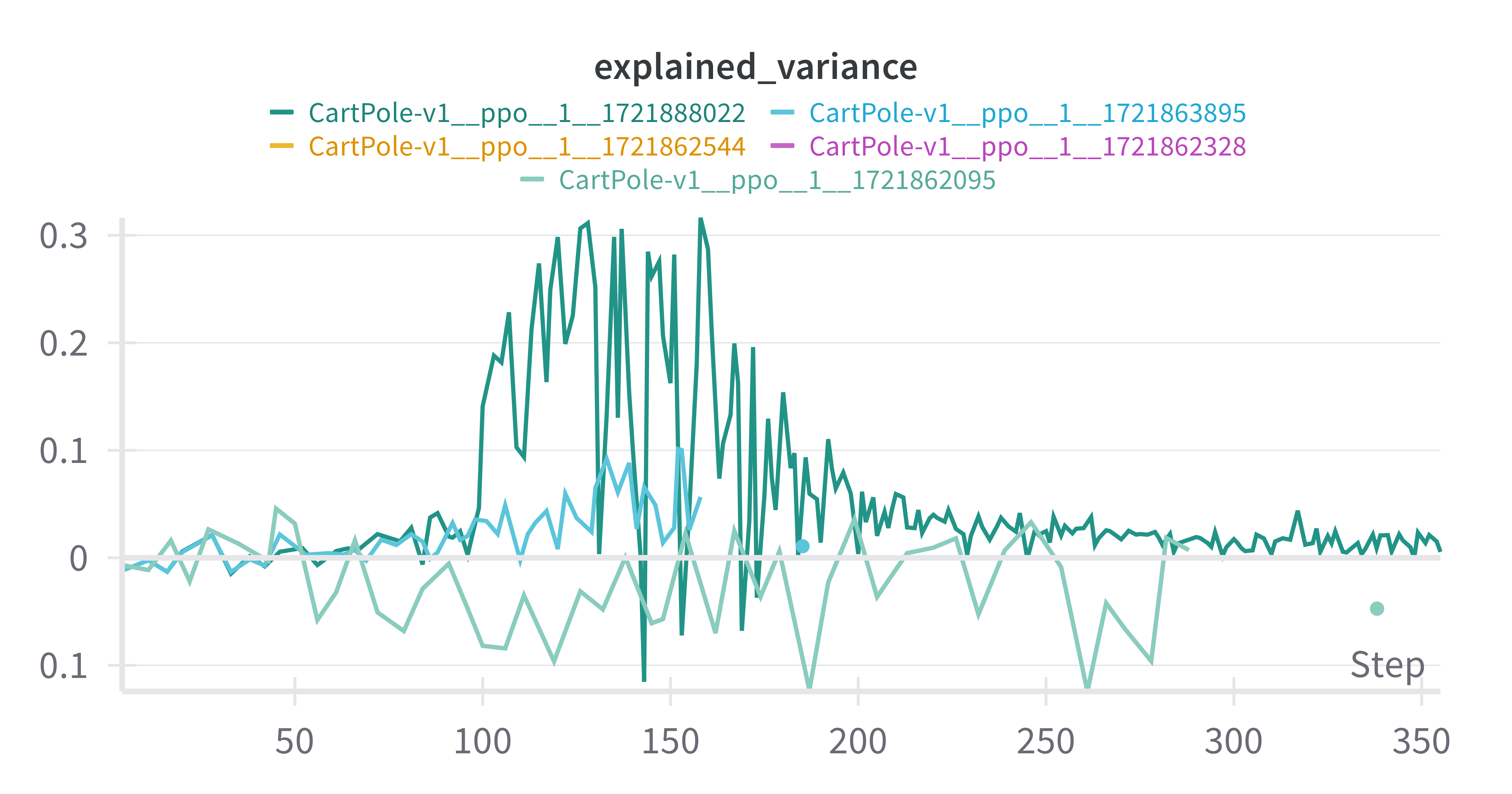

The figure shows that the return estimated from a few sampled trajectories, out of the space of all possible trajectories, has high variance, so the gradient built from them is noisy and is pulled hardest by whichever trajectories happen to have the largest returns.[2]

A trajectory with a relatively high return shifts the policy distribution much more than one with a lower return, because each trajectory’s log-probability gradient is weighted by its raw return. The trick is to shrink the absolute scale of these returns while preserving how the trajectories compare with one another; in other words, the task is to reduce variance. One way to achieve this is to discard the rewards obtained before the time step at which each action is taken.

What else is wrong with Policy Gradients?

Notice also that the objective function has no regularizing term (unlike many other ML objectives), so a single gradient step can change the parameters a lot.

The key problem is that some parameters are delicate: a small change to them, such as a single gradient step, changes the policy a lot. Adding a regularizing term with a separate weight for each parameter is therefore essential for reliable performance.

| [1] | Doing so introduces bias while reducing variance, but then why doesn’t this work for $d \le 2$ dimensions? |

| [2] | You can find the code for this here. |

Variance Reduction

Reward-to-go

One way to reduce the variance of the policy gradient is to exploit causality: the policy at time $t$ cannot affect rewards obtained before $t$. This yields the following modified gradient estimator, in which the sum of rewards does not include the rewards obtained before the time step at which the policy is queried:

$$\nabla_\theta J(\theta) \approx \frac{1}{N}\sum_{i=1}^{N}\sum_{t=1}^{T} \nabla_\theta \log \pi_\theta(a_{it} \mid s_{it})\left(\sum_{t'=t}^{T} r(s_{it'}, a_{it'})\right)$$

This sum of rewards is a sample estimate of the Q-function, $Q^\pi(s_t, a_t)$, and is referred to as the “reward-to-go”. The Q-function is defined as the expected sum of rewards obtained by taking action $a_t$ in state $s_t$ and following the policy thereafter.
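A minimal sketch of the reward-to-go and its effect, on a hypothetical toy: a stateless softmax policy over three actions with fixed, non-negative per-action rewards, run for $T = 3$ steps. Both estimators average to the exact gradient, but in this toy the per-sample variance of the reward-to-go version is lower in every coordinate.

```python
import numpy as np

def reward_to_go(rewards):
    """out[..., t] = sum_{t' >= t} rewards[..., t'] (suffix sums along the last axis)."""
    return np.flip(np.cumsum(np.flip(rewards, axis=-1), axis=-1), axis=-1)

# Hypothetical toy problem: stateless softmax policy, 3 actions, T = 3 steps.
rng = np.random.default_rng(0)
theta = np.array([0.0, 0.5, -0.5])
r = np.array([1.0, 2.0, 0.0])
pi = np.exp(theta) / np.exp(theta).sum()
N, T = 200_000, 3

actions = rng.choice(3, size=(N, T), p=pi)
scores = np.eye(3)[actions] - pi             # (N, T, 3): grad log pi(a_t)
rewards = r[actions]                         # (N, T)

# Per-trajectory gradient samples, shape (N, 3).
full_return = scores.sum(axis=1) * rewards.sum(axis=1, keepdims=True)
to_go = (scores * reward_to_go(rewards)[..., None]).sum(axis=1)

exact = T * pi * (r - pi @ r)
print("means:", full_return.mean(axis=0), to_go.mean(axis=0), exact)
print("variances:", full_return.var(axis=0), to_go.var(axis=0))
```

The dropped terms pair each score with rewards from earlier steps; they average to zero but still add noise to every sample.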

Discounting

Multiplying the rewards by a discount factor $\gamma < 1$ can be interpreted as encouraging the agent to focus more on rewards that are closer in time and less on rewards that are further in the future. It can also be thought of as a means of reducing variance, since rewards further into the future are more uncertain. The discount factor can be incorporated in two ways, as shown below.

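The first way applies the discount to the rewards of the full trajectory:

$$\nabla_\theta J(\theta) \approx \frac{1}{N}\sum_{i=1}^{N}\left(\sum_{t=1}^{T} \nabla_\theta \log \pi_\theta(a_{it} \mid s_{it})\right)\left(\sum_{t'=1}^{T} \gamma^{t'-1} r(s_{it'}, a_{it'})\right)$$

The second way applies the discount to the reward-to-go:

$$\nabla_\theta J(\theta) \approx \frac{1}{N}\sum_{i=1}^{N}\sum_{t=1}^{T} \nabla_\theta \log \pi_\theta(a_{it} \mid s_{it})\left(\sum_{t'=t}^{T} \gamma^{t'-t} r(s_{it'}, a_{it'})\right)$$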
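The two discounted weightings can be computed as follows; this is a minimal sketch for a single trajectory of rewards. The first function assigns every time step the same discounted full-trajectory return $\sum_{t'=1}^{T}\gamma^{t'-1} r_{t'}$; the second computes the discounted reward-to-go $\sum_{t'=t}^{T}\gamma^{t'-t} r_{t'}$ in one backward pass, using $G_t = r_t + \gamma G_{t+1}$.

```python
import numpy as np

def discounted_full_return(rewards, gamma):
    """First form: every step gets sum_{t'} gamma^(t'-1) r_{t'} over the whole trajectory."""
    rewards = np.asarray(rewards, dtype=float)
    discounts = gamma ** np.arange(len(rewards))
    return np.full(len(rewards), np.sum(discounts * rewards))

def discounted_reward_to_go(rewards, gamma):
    """Second form: step t gets sum_{t' >= t} gamma^(t'-t) r_{t'}, via a backward pass."""
    rewards = np.asarray(rewards, dtype=float)
    out = np.zeros_like(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running   # G_t = r_t + gamma * G_{t+1}
        out[t] = running
    return out

print(discounted_full_return([1, 1, 1], 0.5))
print(discounted_reward_to_go([1, 1, 1], 0.5))
```

For rewards `[1, 1, 1]` and $\gamma = 0.5$, the first gives 1.75 at every step and the second gives `[1.75, 1.5, 1.0]`; the two agree at the first step.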

Baselines

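Another variance reduction method is to subtract a baseline $b$ (a constant with respect to $\tau$) from the sum of rewards:

$$\nabla_\theta J(\theta) \approx \frac{1}{N}\sum_{i=1}^{N} \nabla_\theta \log \pi_\theta(\tau_i)\left(r(\tau_i) - b\right)$$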

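This leaves the policy gradient unbiased because

$$\mathbb{E}_{\tau \sim \pi_\theta(\tau)}\left[\nabla_\theta \log \pi_\theta(\tau)\, b\right] = b\int \nabla_\theta \pi_\theta(\tau)\, d\tau = b\,\nabla_\theta \int \pi_\theta(\tau)\, d\tau = b\,\nabla_\theta 1 = 0$$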
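The unbiasedness argument, and the variance reduction that motivates it, can be checked on a hypothetical one-step toy: a softmax policy over three actions whose rewards are all large and positive, with a fixed constant baseline $b = 11$ chosen for illustration. The baseline term averages to roughly zero, the two estimators agree in mean, and the baselined one has far smaller variance.

```python
import numpy as np

# Hypothetical one-step problem: softmax policy over 3 actions, large positive rewards.
rng = np.random.default_rng(0)
theta = np.array([0.0, 0.5, -0.5])
r = np.array([11.0, 12.0, 10.0])
pi = np.exp(theta) / np.exp(theta).sum()
N = 200_000

actions = rng.choice(3, size=N, p=pi)
scores = np.eye(3)[actions] - pi                 # grad log pi(a) for a softmax policy
returns = r[actions]

b = 11.0                                         # a fixed constant baseline (illustrative)
plain = scores * returns[:, None]                # per-sample gradient, no baseline
with_baseline = scores * (returns - b)[:, None]  # per-sample gradient, baseline subtracted
exact = pi * (r - pi @ r)                        # analytic gradient of sum_a pi(a) r(a)

print("E[grad log pi * b]:", (scores * b).mean(axis=0))
print("means:", plain.mean(axis=0), with_baseline.mean(axis=0), exact)
print("variances:", plain.var(axis=0), with_baseline.var(axis=0))
```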

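A common choice is a state-dependent baseline given by a learned value function $V^\pi_\phi(s_t)$. Because it depends only on the state and not on the action $a_t$, it still leaves the gradient unbiased. This value function is trained to approximate the (discounted) sum of future rewards starting from a particular state:

$$V^\pi_\phi(s_t) \approx \sum_{t'=t}^{T} \gamma^{t'-t}\, \mathbb{E}_{\pi_\theta}\left[r(s_{t'}, a_{t'}) \mid s_t\right]$$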

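so the approximate policy gradient now looks like this:

$$\nabla_\theta J(\theta) \approx \frac{1}{N}\sum_{i=1}^{N}\sum_{t=1}^{T} \nabla_\theta \log \pi_\theta(a_{it} \mid s_{it})\left(\left(\sum_{t'=t}^{T} \gamma^{t'-t} r(s_{it'}, a_{it'})\right) - V^\pi_\phi(s_{it})\right)$$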

Generalized Advantage Estimation

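The quantity $\left(\sum_{t'=t}^{T} \gamma^{t'-t} r(s_{t'}, a_{t'})\right) - V^\pi_\phi(s_t)$ from the previous policy gradient expression (dropping the index $i$ for clarity) can be interpreted as an estimate of the advantage function:

$$A^\pi(s_t, a_t) = Q^\pi(s_t, a_t) - V^\pi(s_t)$$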

where $Q^\pi(s_t, a_t)$ is estimated using Monte Carlo returns and $V^\pi(s_t)$ is estimated using the learned value function $V^\pi_\phi$. We can further reduce variance by also using $V^\pi_\phi$ in place of the Monte Carlo returns to estimate the advantage function as:

$$A^\pi(s_t, a_t) \approx \delta_t = r(s_t, a_t) + \gamma V^\pi_\phi(s_{t+1}) - V^\pi_\phi(s_t)$$

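with the edge case $\delta_T = r(s_T, a_T) - V^\pi_\phi(s_T)$. However, this comes at the cost of introducing bias into our policy gradient estimate, due to modelling errors in $V^\pi_\phi$. We can instead use a combination of $n$-step Monte Carlo returns and $V^\pi_\phi$ to estimate the advantage function as:

$$A^\pi_n(s_t, a_t) \approx \sum_{t'=t}^{t+n} \gamma^{t'-t} r(s_{t'}, a_{t'}) - V^\pi_\phi(s_t) + \gamma^{n+1} V^\pi_\phi(s_{t+n+1})$$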

Increasing $n$ incorporates the Monte Carlo returns more heavily in the advantage estimate, which lowers bias and increases variance, while decreasing $n$ does the opposite. Note that $n = T$ (with rewards and values beyond step $T$ taken to be zero) recovers the unbiased but higher-variance Monte Carlo advantage estimate used in the baseline section, while $n = 0$ recovers the lower-variance but higher-bias one-step estimate $\delta_t$.
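A direct, unoptimized sketch of the $n$-step estimate for one trajectory, assuming the episode ends after the last step so that rewards and values beyond it are treated as zero. The rewards and value estimates in the example are hypothetical; $n = 0$ yields $\delta_t$ and $n = T$ yields the Monte Carlo advantage.

```python
import numpy as np

def n_step_advantage(rewards, values, gamma, n):
    """A_n(s_t, a_t) = sum_{t'=t}^{t+n} gamma^(t'-t) r_t' - V(s_t) + gamma^(n+1) V(s_{t+n+1}).

    Assumes the episode terminates after the last step: rewards past the end are
    dropped and the value of any state past the end is taken to be 0.
    """
    rewards = np.asarray(rewards, dtype=float)
    values = np.asarray(values, dtype=float)
    T = len(rewards)
    adv = np.zeros(T)
    for t in range(T):
        end = min(t + n, T - 1)
        ret = sum(gamma ** (k - t) * rewards[k] for k in range(t, end + 1))
        if t + n + 1 < T:                       # bootstrap only if that state exists
            ret += gamma ** (n + 1) * values[t + n + 1]
        adv[t] = ret - values[t]
    return adv

rewards, values = [1.0, 0.0, 2.0], [0.5, 1.0, 1.5]   # hypothetical trajectory
print(n_step_advantage(rewards, values, 0.9, 0))      # one-step deltas
print(n_step_advantage(rewards, values, 0.9, 3))      # Monte Carlo advantage
```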

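We can combine multiple $n$-step advantage estimates as an exponentially weighted sum, which is known as the generalized advantage estimator (GAE). Let $\lambda \in [0, 1)$. Then we define:

$$A^\pi_{\text{GAE}}(s_t, a_t) = \frac{1-\lambda}{1-\lambda^{T-t+1}}\sum_{n=0}^{T-t} \lambda^{n} A^\pi_n(s_t, a_t)$$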

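where $(1-\lambda)/(1-\lambda^{T-t+1})$ is a normalizing constant that makes the weights sum to one. Note that a higher $\lambda$ emphasizes advantage estimates with higher values of $n$, and a lower $\lambda$ does the opposite. Thus, $\lambda$ serves as a control for the bias-variance tradeoff, where increasing $\lambda$ decreases bias and increases variance. In the infinite horizon case ($T = \infty$), we can show:

$$A^\pi_{\text{GAE}}(s_t, a_t) = (1-\lambda)\sum_{n=0}^{\infty} \lambda^{n} A^\pi_n(s_t, a_t) = \sum_{t'=t}^{\infty} (\gamma\lambda)^{t'-t}\,\delta_{t'}$$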

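where we have omitted the derivation for brevity (see the GAE paper for details). In the finite horizon case, the sum is commonly truncated at the end of the trajectory:

$$A^\pi_{\text{GAE}}(s_t, a_t) = \sum_{t'=t}^{T} (\gamma\lambda)^{t'-t}\,\delta_{t'}$$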

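which gives an efficient way to implement the generalized advantage estimator, since we can compute it recursively backward in time:

$$A^\pi_{\text{GAE}}(s_t, a_t) = \delta_t + \gamma\lambda\, A^\pi_{\text{GAE}}(s_{t+1}, a_{t+1}), \qquad A^\pi_{\text{GAE}}(s_T, a_T) = \delta_T$$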
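A minimal NumPy sketch of the backward recursion for one trajectory, assuming the episode terminates after the last step (so the value of the state after it is zero); the rewards and value estimates in the example are hypothetical. With $\lambda = 0$ it reduces to the one-step $\delta_t$, and with $\lambda = 1$ to the Monte Carlo advantage.

```python
import numpy as np

def gae(rewards, values, gamma, lam):
    """Truncated GAE via the backward recursion A_t = delta_t + gamma * lam * A_{t+1}.

    Assumes the episode terminates after the last step (V of the state after it is 0).
    """
    rewards = np.asarray(rewards, dtype=float)
    values = np.asarray(values, dtype=float)
    next_values = np.append(values[1:], 0.0)          # V(s_{t+1}), 0 after the last step
    deltas = rewards + gamma * next_values - values   # one-step TD errors delta_t
    adv = np.zeros(len(rewards))
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = deltas[t] + gamma * lam * running
        adv[t] = running
    return adv

rewards, values = [1.0, 0.0, 2.0], [0.5, 1.0, 1.5]    # hypothetical trajectory
for lam in (0.0, 0.5, 1.0):
    print(lam, gae(rewards, values, gamma=0.9, lam=lam))
```

The loop touches each step once, so the cost is linear in $T$ rather than quadratic as in a direct sum over $n$-step estimates.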

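Similarly, these ideas carry over to off-policy policy gradient methods, where the data is collected by one policy $\pi_\theta$ while the model learns new parameters $\theta'$ (loosely similar to fine-tuning in deep learning terms). The gradient is then corrected with an importance weight:

$$\nabla_{\theta'} J(\theta') = \mathbb{E}_{\tau \sim \pi_\theta(\tau)}\left[\frac{\pi_{\theta'}(\tau)}{\pi_\theta(\tau)}\,\nabla_{\theta'} \log \pi_{\theta'}(\tau)\, r(\tau)\right]$$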
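A one-step toy, hypothetical and for illustration only, shows the importance-weighted estimator at work: actions are sampled from a uniform behavior policy, yet the weighted average recovers the gradient at a different softmax policy $\pi_{\theta'}$. For a single step, the trajectory ratio reduces to $\pi_{\theta'}(a)/\pi_\theta(a)$.

```python
import numpy as np

def softmax(logits):
    z = np.exp(logits - logits.max())
    return z / z.sum()

rng = np.random.default_rng(0)
r = np.array([1.0, 2.0, 0.0])                  # hypothetical per-action rewards
theta_behavior = np.zeros(3)                   # policy that collected the data (uniform)
theta_new = np.array([0.0, 0.5, -0.5])         # policy being updated
pi_b, pi_new = softmax(theta_behavior), softmax(theta_new)

N = 200_000
actions = rng.choice(3, size=N, p=pi_b)        # data sampled from the behavior policy
weights = pi_new[actions] / pi_b[actions]      # importance ratios pi_theta'(a) / pi_theta(a)
scores = np.eye(3)[actions] - pi_new           # grad log pi_theta'(a) for a softmax policy
estimate = (weights[:, None] * scores * r[actions][:, None]).mean(axis=0)

exact = pi_new * (r - pi_new @ r)              # analytic gradient of sum_a pi'(a) r(a)
print(estimate, exact)
```

Over long trajectories the product of per-step ratios can grow or shrink exponentially, which is itself a source of variance.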

So we see that:

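By using the causality trick, we drop reward terms that the current action cannot influence; their expected contribution is zero, so the expectation is unchanged while the variance goes down. By adding baselines, we center the sampled rewards, which also reduces variance while keeping the gradient unbiased.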

In GAE, the advantage estimate takes the place of the baseline-subtracted return.

If the learned value function behind the advantage estimate is inaccurate, the entire policy gradient can become biased. This is acceptable because we trade a bit of bias for a large reduction in variance.

References

Fisher, R. A., On the Mathematical Foundations of Theoretical Statistics, The Royal Society, 1922.

Peters, Jan, and Schaal, Stefan, Reinforcement learning of motor skills with policy gradients, Neural Networks, 2008.

Further Reading

Antognini, Understanding Stein's paradox, 2021.

Jones, James-Stein estimator, 2020.

Chau, Demystifying Stein's Paradox, 2021.